On the proper usage of commonFormat for AVAudioFile

Introduction

I started looking into AVAudioFile while working on PALApp, my exploration into the world of portable AI. The MVP I was implementing receives audio over BLE from a Friend wearable and stores it in an audio file on the device.

Try 1

The Friend audio samples are 16-bit little-endian integers.

To ease testing, I simulate this input by filling a buffer with a simple sine wave:

```swift
let samples: [Int16] = {
    var s = [Int16]()
    for i in 0..<16000 {
        s.append(Int16(sinf(Float(i) / 2) * Float(Int16.max)))
    }
    return s
}()
```
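In the app itself, the samples arrive over BLE as raw bytes rather than as an `[Int16]` array. Below is a minimal sketch of turning such a payload into samples. It assumes the payload contains only whole 16-bit little-endian samples with no packet header, which may not match the actual Friend packet layout. `decodeInt16LE` is a hypothetical helper name:

```swift
import Foundation

/// Hypothetical helper: decodes a payload of 16-bit little-endian PCM samples.
/// Assumption: the payload holds raw samples only (no packet header).
/// A trailing odd byte, if any, is ignored.
func decodeInt16LE(_ payload: Data) -> [Int16] {
    var samples = [Int16]()
    samples.reserveCapacity(payload.count / 2)
    var index = payload.startIndex
    while index + 1 < payload.endIndex {
        // Little-endian: low byte first, then high byte.
        let low = UInt16(payload[index])
        let high = UInt16(payload[index + 1])
        samples.append(Int16(bitPattern: low | (high << 8)))
        index += 2
    }
    return samples
}

// 0x0001, 0xFFFF and 0x8000 decode to 1, -1 and Int16.min.
let decoded = decodeInt16LE(Data([0x01, 0x00, 0xFF, 0xFF, 0x00, 0x80]))
print(decoded) // [1, -1, -32768]
```

Reading the payload byte by byte is slower than reinterpreting the memory, but it does not depend on the alignment of the `Data` storage or on the host's byte order.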

Given that input, I started by creating an AVAudioFormat for 16-bit integer PCM, an AVAudioPCMBuffer using that format and an AVAudioFile. I then filled the buffer with the samples and tried to save the file.

```swift
// 16-bit integer, mono, deinterleaved PCM
guard let audioFormat = AVAudioFormat(commonFormat: .pcmFormatInt16, sampleRate: sampleRate, channels: 1, interleaved: false) else {
    print("Error creating audio format")
    return
}

guard let pcmBuffer = AVAudioPCMBuffer(pcmFormat: audioFormat, frameCapacity: 16000) else {
    print("Error creating PCM buffer")
    return
}
pcmBuffer.frameLength = 16000

// Copy the raw Int16 bytes into the buffer's first (and only) channel
let channels = UnsafeBufferPointer(start: pcmBuffer.int16ChannelData, count: Int(pcmBuffer.format.channelCount))
let data = samples.withUnsafeBufferPointer { Data(buffer: $0) }
UnsafeMutableRawPointer(channels[0]).withMemoryRebound(to: UInt8.self, capacity: data.count) { (bytes: UnsafeMutablePointer<UInt8>) in
    data.copyBytes(to: bytes, count: data.count)
}

guard let audioFile = try? AVAudioFile(forWriting: URL(fileURLWithPath: "generated.wav"), settings: audioFormat.settings) else {
    fatalError("Error initializing AVAudioFile")
}

do {
    try audioFile.write(from: pcmBuffer)
} catch {
    print(error.localizedDescription)
}
```

And this crashed. The write(from:) call did not even throw an error. Instead, the process stopped on a failing assertion:

```
CABufferList.h:184 ASSERTION FAILURE [(nBytes <= buf->mDataByteSize) != 0 is false]:
```

I tried to understand the error by checking how I was filling the buffer and copying the bytes. Had I correctly calculated the number of samples, or the size of the data?

Searching the web for that error message did not help much, and my (incorrect) conclusion at the time was that AVAudioFile somehow did not support writing in Int16 format.
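In hindsight, a cheap safeguard would have turned this crash into a readable error: check that the buffer's format matches the file's `processingFormat` before calling `write(from:)`. A sketch of such a guard that reproduces the Try 1 setup. `checkedWrite` and `AudioWriteError` are hypothetical names, not part of AVFoundation:

```swift
import AVFoundation

/// Hypothetical error type for this sketch.
enum AudioWriteError: Error {
    case formatMismatch(buffer: AVAudioFormat, processing: AVAudioFormat)
}

/// Hypothetical helper: refuses to write a buffer whose format differs from the
/// file's processing format, instead of letting write(from:) hit an assertion.
func checkedWrite(_ buffer: AVAudioPCMBuffer, to file: AVAudioFile) throws {
    // AVAudioFormat is an NSObject subclass, so == compares formats via isEqual(_:).
    guard buffer.format == file.processingFormat else {
        throw AudioWriteError.formatMismatch(buffer: buffer.format, processing: file.processingFormat)
    }
    try file.write(from: buffer)
}

// Try 1 setup: an Int16 buffer and a file opened with AVAudioFile(forWriting:settings:).
let url = FileManager.default.temporaryDirectory.appendingPathComponent("mismatch.wav")
let int16Format = AVAudioFormat(commonFormat: .pcmFormatInt16, sampleRate: 16_000,
                                channels: 1, interleaved: false)!
let file = try AVAudioFile(forWriting: url, settings: int16Format.settings)
let buffer = AVAudioPCMBuffer(pcmFormat: int16Format, frameCapacity: 16)!
buffer.frameLength = 16
do {
    try checkedWrite(buffer, to: file)
} catch {
    print("Not written: \(error)")
}
```

With this setup, the check fails because the file's processing format is Float32, not Int16.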

Try 2

Some of the example code I found during my search used the Float32 format, so I updated my code to use only that. Making this work meant converting the data received in Int16 format to Float32 manually. That ended up being several lines of code that just did not feel right.

```swift
// 32-bit float, mono, deinterleaved PCM
guard let audioFormat = AVAudioFormat(commonFormat: .pcmFormatFloat32, sampleRate: sampleRate, channels: 1, interleaved: false) else {
    print("Error creating audio format")
    return
}

guard let pcmBuffer = AVAudioPCMBuffer(pcmFormat: audioFormat, frameCapacity: 16000) else {
    print("Error creating PCM buffer")
    return
}
pcmBuffer.frameLength = 16000

// Manual conversion: scale each Int16 sample to a Float32 in roughly [-1, 1]
let f32Array = samples.map { Float32($0) / Float32(Int16.max) }

// Copy the converted bytes into the buffer's first (and only) channel
let channels = UnsafeBufferPointer(start: pcmBuffer.floatChannelData, count: Int(pcmBuffer.format.channelCount))
let data = f32Array.withUnsafeBufferPointer { Data(buffer: $0) }
UnsafeMutableRawPointer(channels[0]).withMemoryRebound(to: UInt8.self, capacity: data.count) { (bytes: UnsafeMutablePointer<UInt8>) in
    data.copyBytes(to: bytes, count: data.count)
}

guard let audioFile = try? AVAudioFile(forWriting: URL(fileURLWithPath: "generated.wav"), settings: audioFormat.settings) else {
    fatalError("Error initializing AVAudioFile")
}

do {
    try audioFile.write(from: pcmBuffer)
} catch {
    print(error.localizedDescription)
}
```

This worked: it saved a proper WAV file containing the audio I was expecting.

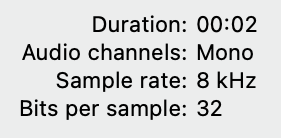

However, inspecting the file in the Finder shows that it uses 32 bits per sample. That is wasteful, given that the source data only has 16 bits per sample.

Inspecting the WAV file shows it uses 32 bits per sample
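The Finder is not the only way to check this. Below is a minimal sketch that reads the information back with AVAudioFile. It assumes the `generated.wav` path used by the code above, and `bitsPerSample(of:)` is a hypothetical helper name. `fileFormat` describes the data as stored on disk, while `processingFormat` describes the buffers you read from the file:

```swift
import AVFoundation

/// Hypothetical helper: returns the bits per sample of the data stored in an audio file.
func bitsPerSample(of url: URL) throws -> UInt32 {
    let file = try AVAudioFile(forReading: url)
    // fileFormat describes the on-disk data; its AudioStreamBasicDescription
    // carries the bit depth. (processingFormat would be deinterleaved Float32 here.)
    return file.fileFormat.streamDescription.pointee.mBitsPerChannel
}

let url = URL(fileURLWithPath: "generated.wav")  // the file written by the code above
do {
    let bits = try bitsPerSample(of: url)
    print("generated.wav: \(bits) bits per sample")
} catch {
    print("Could not read generated.wav: \(error)")
}
```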

Try 3

Until then, I had written my code by searching the internet, adapting code snippets to my needs and quickly glancing at the reference documentation in Xcode. I had never taken the time to understand the audio framework Apple provides in more detail.

So I went back to my collection of WWDC videos and found the one that introduced the classes I was using: session 501 from WWDC 2014, “What’s New in Core Audio” (which, unfortunately, is no longer available on the Apple website).

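In that session, they talked about the concept of the “processing format”: the PCM format of the buffers you exchange with the file. This can differ from the format in which the data is stored on disk. You can specify it with the initializer that takes a commonFormat parameter, AVAudioFile(forWriting:settings:commonFormat:interleaved:). The AVAudioFile(forWriting:settings:) initializer I had been using takes no such parameter. It always opens the file with the standard processing format, which is deinterleaved Float32, and write(from:) expects the buffer's format to match the file's processingFormat. That explains both the crash in Try 1 and why the Float32 version in Try 2 worked.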
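To make the distinction concrete: the `settings` dictionary describes what ends up on disk, while `commonFormat` and `interleaved` describe the buffers passed to `write(from:)`, and the two may differ. The sketch below keeps Float32 buffers in memory, as in Try 2, but still stores 16-bit integers on disk. It assumes a 16 kHz mono signal and a hypothetical temporary file name:

```swift
import AVFoundation

let sampleRate = 16_000.0  // assumption for this sketch: 16 kHz mono

// On-disk (file) format: 16-bit little-endian integer PCM; WAV type comes from the extension.
let fileSettings: [String: Any] = [
    AVFormatIDKey: kAudioFormatLinearPCM,
    AVSampleRateKey: sampleRate,
    AVNumberOfChannelsKey: 1,
    AVLinearPCMBitDepthKey: 16,
    AVLinearPCMIsFloatKey: false,
    AVLinearPCMIsBigEndianKey: false,
]
let url = FileManager.default.temporaryDirectory.appendingPathComponent("float_in_int16_out.wav")

// Processing format: the buffers handed to write(from:) are deinterleaved Float32.
let file = try AVAudioFile(forWriting: url, settings: fileSettings,
                           commonFormat: .pcmFormatFloat32, interleaved: false)
let buffer = AVAudioPCMBuffer(pcmFormat: file.processingFormat, frameCapacity: 1_600)!
buffer.frameLength = buffer.frameCapacity
let channel = buffer.floatChannelData![0]
for i in 0..<Int(buffer.frameLength) {
    channel[i] = 0.5 * sinf(Float(i) / 2)
}

// AVAudioFile converts the Float32 samples to 16-bit integers as it writes them.
try file.write(from: buffer)
print("bits per sample on disk:", file.fileFormat.streamDescription.pointee.mBitsPerChannel)
```

Building the buffer from `file.processingFormat` rather than from a separately constructed format guarantees that the two match.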

```swift
// Processing format: the buffer holds 16-bit integer, mono, deinterleaved PCM
guard let audioFormat = AVAudioFormat(commonFormat: .pcmFormatInt16, sampleRate: sampleRate, channels: 1, interleaved: false) else {
    print("Error creating audio format")
    return
}

guard let pcmBuffer = AVAudioPCMBuffer(pcmFormat: audioFormat, frameCapacity: 16000) else {
    print("Error creating PCM buffer")
    return
}
pcmBuffer.frameLength = 16000

// Copy the raw Int16 bytes into the buffer's first (and only) channel
let channels = UnsafeBufferPointer(start: pcmBuffer.int16ChannelData, count: Int(pcmBuffer.format.channelCount))
let data = samples.withUnsafeBufferPointer { Data(buffer: $0) }
UnsafeMutableRawPointer(channels[0]).withMemoryRebound(to: UInt8.self, capacity: data.count) { (bytes: UnsafeMutablePointer<UInt8>) in
    data.copyBytes(to: bytes, count: data.count)
}

// On-disk format (settings): also 16-bit integer PCM
guard let recordingAudioFormat = AVAudioFormat(commonFormat: .pcmFormatInt16, sampleRate: sampleRate, channels: 1, interleaved: false) else {
    print("Error creating recording audio format")
    return
}

// commonFormat/interleaved set the file's processing format to match pcmBuffer
guard let audioFile = try? AVAudioFile(forWriting: URL(fileURLWithPath: "generated_int16.wav"), settings: recordingAudioFormat.settings, commonFormat: .pcmFormatInt16, interleaved: false) else {
    fatalError("Error initializing AVAudioFile")
}

do {
    try audioFile.write(from: pcmBuffer)
} catch {
    print(error.localizedDescription)
}
```

This works with simpler code and less data manipulation: there is no intermediate Float32 copy, so it is faster and uses less memory. The generated WAV file also uses 16 bits per sample, which matches the original data format.
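The same approach can be packaged as a small reusable function. The sketch below assumes mono samples and a caller-supplied sample rate. `writeInt16WAV` and `WAVWriteError` are hypothetical names, and the copy uses a plain element-wise loop instead of rebinding memory to bytes:

```swift
import AVFoundation

/// Hypothetical error for this sketch.
enum WAVWriteError: Error { case setupFailed }

/// Hypothetical helper: writes mono 16-bit samples to a WAV file with no Float32 round trip.
func writeInt16WAV(_ samples: [Int16], sampleRate: Double, to url: URL) throws {
    guard let format = AVAudioFormat(commonFormat: .pcmFormatInt16, sampleRate: sampleRate,
                                     channels: 1, interleaved: false),
          let buffer = AVAudioPCMBuffer(pcmFormat: format,
                                        frameCapacity: AVAudioFrameCount(samples.count)),
          let channel = buffer.int16ChannelData?[0] else {
        throw WAVWriteError.setupFailed
    }
    buffer.frameLength = buffer.frameCapacity
    // Element-wise copy into the buffer's only channel.
    for (i, sample) in samples.enumerated() {
        channel[i] = sample
    }
    // Same format on disk (settings) and for processing (commonFormat: .pcmFormatInt16),
    // so the buffer matches file.processingFormat.
    let file = try AVAudioFile(forWriting: url, settings: format.settings,
                               commonFormat: .pcmFormatInt16, interleaved: false)
    try file.write(from: buffer)
}   // the file is closed when `file` is released

let samples: [Int16] = (0..<16_000).map { Int16(sinf(Float($0) / 2) * Float(Int16.max)) }
let url = FileManager.default.temporaryDirectory.appendingPathComponent("generated_int16.wav")
try writeInt16WAV(samples, sampleRate: 16_000, to: url)
```

No format conversion happens in either direction. Reading the file back with AVAudioFile(forReading:commonFormat:interleaved:) and `.pcmFormatInt16` should therefore return exactly the samples that were written.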

Conclusion

Most of the time, if you encounter an error in your code and it feels like you’re fighting the framework, ending up implementing some weird workaround, you’re probably doing it wrong.

It’s worth going back to basics, reading the Apple documentation and understanding the classes you’re working with. I find that searching the WWDC video archives for the session in which a particular topic was first introduced can help a lot. (Pro tip: Apple removes older videos as time goes by, so keep a local copy of all the videos they publish, just in case.)

You can find a sample project illustrating the use of the above code on GitHub at Test_AVAudioFile.