Exploring Uncut - March 3rd, 2025

Introduction

I would like to publish more often on this blog. I really do.

In fact, I have a long list of topics to write about. I even have several draft posts that I should just finalize and publish.

One main issue is that, as I reread a post to put the finishing touches on it, I ask myself more questions. Is it totally exact? Does it need more background information? Why can I write this, and can I prove it somehow?

I don’t think that this is wrong per se, but it conflicts with the desire to publish more often. And I believe a lot of the information that will end up in those posts would already be helpful to some people right now, even if it is incomplete or potentially wrong, as long as I make that clear in my writing.

I also think that my journey to get to that final post and the process I use to overcome the challenges along the way have value in themselves.

I thus decided to start this new “Exploring Uncut” series, through which I’ll make public the explorations that have been keeping me busy during the week.

I will certainly abandon some of those or put them aside for a while. There will probably be more questions than answers, more failures than successes, more ideas than achievements. But I don’t care: at least I’ll get something out there that one person might find interesting or useful.

And if not, I will have cleared my head for a fresh week.

Shall we play a game

Getting an idea

Several weeks ago, Frank Lefebvre posted a video of a small game he was developing in Embedded Swift, in preparation for his workshop at ARCtic Conference. I found this really cool and was inspired to think about games I could develop using Embedded Swift. And why not even build a portable gaming console (we’ll see about that one).

As I have a couple of Seeed Studio Round Displays lying around, which I’ve already used in a project, I was wondering what would be a good use for them.

The format of the screen reminded me of a video game I loved to play as a kid on my Commodore 64: Gyruss. I think this can be a great fit, as the game graphics are arranged in a circle.

I did some quick research and found this YouTube video: The History of Gyruss - Arcade console documentary, from which I learned that this kind of layout / animation in games has a name: Tubeshooters.

Finding this video had the adverse side effect of costing me quite a bit of time. Being a fan (and collector) of old computers, I spent the next few hours watching retro gaming and retro computer videos.

A first test

After that interlude, I started the first test. Can I make a spaceship go round the border of the display?

So the first step was to get an icon for my ship. I remembered Paul Hudson mentioning, during an HWS+ session, a great site with free artwork that can be used for game development: Kenney.

And sure enough, I found my spaceship (Space Shooter Extension · Kenney).

I had already used LVGL (Light and Versatile Graphics Library), a free and open-source graphics library widely used in the embedded space, for a previous project. I had also written some Swift types to wrap the core features I needed, like managing Screens and displaying Images.

Starting from there, I added the ability to move and rotate images and was nearly ready for some testing.

The final step was to convert my spaceship image to the appropriate format. First, since the screen has a diameter of 240 pixels, I needed to resize the icon I downloaded. But more importantly, the most efficient way to use an image with LVGL is to have it as a byte array compiled into the code, rather than loading it from a file (and certainly not in a compressed format).

There’s an online Image Converter that you can use for that, but there’s also an offline version that I had installed before. I went to a terminal and used the following command:

./lv_img_conv.js SpaceShip.png -f -c CF_INDEXED_2_BIT

This generates C code. Although I could use it as is, and Swift’s C interop would handle that perfectly, the C code is so simple that I preferred to convert it to Swift. It now looks something like this:

    enum SpaceShip {
        // Pixel data produced by the converter: 3 bytes per pixel.
        static private var data: [UInt8] = [
            /*Pixel format: Alpha 8 bit, Red: 5 bit, Green: 6 bit, Blue: 5 bit BUT the 2 color bytes are swapped*/
            0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, …
        ]

        // Image descriptor handed to LVGL.
        // Caveat: the buffer pointer escapes the closure, which Swift does not formally guarantee to stay valid.
        static let description = {
            Self.data.withUnsafeBufferPointer { ptr in
                lv_img_dsc_t(
                    header: lv_img_header_t(cf: UInt32(LV_IMG_CF_TRUE_COLOR_ALPHA), always_zero: 0, reserved: 0, w: 40, h: 30),
                    // 40 x 30 = 1200 pixels
                    data_size: 1200 * UInt32(LV_IMG_PX_SIZE_ALPHA_BYTE),
                    data: ptr.baseAddress)
            }
        }()
    }

I have already thought about forking the tool so that it can generate the Swift code directly, but this is not my priority right now.
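To make the pixel format comment in the array a bit more concrete, here is a minimal sketch of how a single RGBA pixel could be packed into that 3-byte layout. The function name `packPixel` is hypothetical, and two things are assumptions on my part: that "swapped" means the high byte of the 16-bit color comes first, and that channels are reduced by simple truncation (the converter may round or dither instead).

```swift
/// Packs one 8-bit-per-channel RGBA pixel into 3 bytes:
/// RGB565 with its two bytes swapped, followed by the alpha byte.
/// Assumption: "swapped" means high byte first, then low byte.
func packPixel(red: UInt8, green: UInt8, blue: UInt8, alpha: UInt8) -> [UInt8] {
    // Keep the 5 / 6 / 5 most significant bits of each channel (truncation).
    let r5 = UInt16(red >> 3)
    let g6 = UInt16(green >> 2)
    let b5 = UInt16(blue >> 3)
    let rgb565 = (r5 << 11) | (g6 << 5) | b5
    return [UInt8(rgb565 >> 8), UInt8(rgb565 & 0xFF), alpha]
}

// Opaque pure red: 0xF800 in RGB565, so the bytes are 0xF8, 0x00, then alpha.
print(packPixel(red: 255, green: 0, blue: 0, alpha: 255))   // [248, 0, 255]
```

A fully transparent pixel ends with a 0x00 alpha byte, which is consistent with the run of zeros at the start of the array (the corners of the image are empty).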

The first test was not very conclusive, as the ship was not rotating around an axis perpendicular to the screen but kind of flipping around a vertical axis in a way not really correlated to the provided angle.

The LVGL documentation is quite good, and reading it provided the answer:

“The transformations require the whole image to be available. Therefore indexed images, alpha only images or images from files can not be transformed.”

Indeed, as you might have noticed, the converter requires a color format. I initially used CF_INDEXED_2_BIT because the image is pretty simple, and an indexed format would have kept its size down.

Changing the color format to CF_TRUE_COLOR_ALPHA made the rotation work as expected.
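To give an idea of what that change costs in flash, here is a rough back-of-the-envelope sketch for the 40 x 30 spaceship. The function names are hypothetical. The true color figure assumes a 16-bit color depth (2 color bytes plus 1 alpha byte per pixel, as in the pixel format comment above), and the indexed figure assumes a palette stored as 4 bytes per entry with rows padded to whole bytes, which is my understanding of LVGL 8 rather than something I measured.

```swift
/// Data size for a true color + alpha image at 16-bit color depth:
/// 2 color bytes and 1 alpha byte per pixel.
func trueColorAlphaSize(width: Int, height: Int) -> Int {
    width * height * 3
}

/// Data size for an indexed image.
/// Assumptions: 4 bytes per palette entry, rows padded to a whole byte.
func indexedSize(width: Int, height: Int, bitsPerPixel: Int) -> Int {
    let paletteBytes = (1 << bitsPerPixel) * 4
    let rowBytes = (width * bitsPerPixel + 7) / 8
    return paletteBytes + rowBytes * height
}

let trueColor = trueColorAlphaSize(width: 40, height: 30)
let indexed = indexedSize(width: 40, height: 30, bitsPerPixel: 2)
print(trueColor, indexed)   // 3600 316
```

So under these assumptions the rotatable version is roughly eleven times larger, which is not a problem for one small sprite but is worth keeping in mind as the number of images grows.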

The result

Here’s the result of the first test, a spaceship going round the screen.

The spaceship going round the screen.

It’s not super fast and there are some artefacts when the screen is redrawn, but we’ll see how this goes as we progress with the game development.

I haven’t pushed any code yet at this stage, but here is most of what I’ve got so far. Note that nRF Connect SDK v2.7 uses LVGL version 8.4. Most APIs have been renamed in the newer LVGL 9 versions.

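    @main
    struct Main {
        static func main() {
            let lvgl = LVGL(device: display_dev)
            let screen = LVGLScreen()
            screen.setActive()

            // Center-aligned image, moved 100 px below the screen center.
            let spaceShip = LVGLImage(parent: screen, imageDescription: SpaceShip.description)
            spaceShip.setPosition(0, 100)
            // The pivot is relative to the image's top-left corner; this point is the screen center.
            spaceShip.setPivot(20, -85)

            lvgl.taskHandler()
            lvgl.displayBlankingOff()

            var value: Int16 = 0  // angle in degrees
            while true {
                k_msleep(16)
                value += 3
                // Wrap at 360 so that 0° and 360° are not drawn as two identical frames.
                if value >= 360 {
                    value -= 360
                }
                // lv_img_set_angle expects tenths of a degree.
                spaceShip.setAngle(value * 10)
                lvgl.taskHandler()
            }
        }
    }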

    // MARK: LVGL

    class LVGLScreen: LVGLObject, Equatable {
        // Registry used to map LVGL's active screen pointer back to its Swift wrapper.
        // Note: it holds strong references, so a registered screen is never deallocated.
        static private var screens = [LVGLScreen]()

        init(pointer: UnsafeMutablePointer<lv_obj_t>? = lv_obj_create(nil)) {
            self.pointer = pointer
            Self.screens.append(self)
        }

        var pointer: UnsafeMutablePointer<lv_obj_t>?

        static func getActive() -> LVGLScreen? {
            let activeScreen = lv_scr_act()
            return Self.screens.first { $0.pointer == activeScreen }
        }

        // Two wrappers are equal when they wrap the same LVGL object.
        static func == (lhs: LVGLScreen, rhs: LVGLScreen) -> Bool {
            lhs.pointer == rhs.pointer
        }

        var isActive: Bool {
            Self.getActive() == self
        }

        func setActive() {
            if let pointer {
                lv_disp_load_scr(pointer)
            }
        }

        deinit {
            Self.screens.removeAll { $0 == self }
        }
    }

    struct LVGL {
        var device: UnsafePointer<device>

        init(device: UnsafePointer<device>) {
            if !device_is_ready(device) {
                fatalError("Device not ready, aborting test")
            }
            self.device = device
        }

        func taskHandler() {
            lv_task_handler()
        }

        func displayBlankingOff() {
            display_blanking_off(device)
        }
    }

    protocol LVGLObject {
        var pointer: UnsafeMutablePointer<lv_obj_t>? { get }
    }

    extension LVGLObject {
        func setPosition(_ x: Int16, _ y: Int16) {
            lv_obj_set_pos(self.pointer, x, y)
        }
    }

    // The case order is meant to mirror LVGL 8's LV_ALIGN_* constants, since rawValue is passed to lv_obj_align.
    public enum LVGLAlignment: UInt8 {
        case `default` = 0
        case topLeft
        case topMid
        case topRight
        case bottomLeft
        case bottomMid
        case bottomRight
        case leftMid
        case rightMid
        case center
        case outTopLeft
        case outTopMid
        case outTopRight
        case outBottomLeft
        case outBottomMid
        case outBottomRight
        case outLeftTop
        case outLeftMid
        case outLeftBottom
        case outRightTop
        case outRightMid
        case outRightBottom
    }

    struct LVGLImage: LVGLObject {
        // Heap copy of the descriptor: LVGL keeps the pointer passed to lv_img_set_src,
        // so it must outlive the initializer. It is never deallocated here.
        var imgDesc: UnsafeMutablePointer<lv_img_dsc_t>
        var pointer: UnsafeMutablePointer<lv_obj_t>?

        public init(
            parent: LVGLScreen? = nil,
            imageDescription: lv_img_dsc_t,
            alignment: LVGLAlignment = .center
        ) {
            guard let imgObj = lv_img_create(parent?.pointer) else {
                fatalError("Failed to create image")
            }
            self.pointer = imgObj
            imgDesc = UnsafeMutablePointer<lv_img_dsc_t>.allocate(capacity: 1)
            imgDesc.initialize(to: imageDescription)
            lv_img_set_src(imgObj, imgDesc)
            lv_obj_align(imgObj, alignment.rawValue, 0, 0)
        }

        // Rotation center, relative to the image's top-left corner.
        func setPivot(_ x: Int16, _ y: Int16) {
            lv_img_set_pivot(self.pointer, x, y)
        }

        // Angle in tenths of a degree (LVGL 8).
        func setAngle(_ angle: Int16) {
            lv_img_set_angle(self.pointer, angle)
        }
    }
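The magic numbers in `main` (the `20, -85` pivot, the `* 10` on the angle, the 3 degree step every 16 ms) can be derived rather than guessed. Here is a small sketch of that arithmetic in plain Swift, with no LVGL calls so it runs anywhere. The three function names are hypothetical helpers, not part of my wrapper, and the timing figure is only a lower bound since it ignores the time LVGL spends redrawing.

```swift
/// Pivot, relative to the image's top-left corner, that makes a
/// center-aligned image rotate around the middle of the screen.
/// The offset is the one passed to setPosition after center alignment.
func pivotForOrbit(imageWidth: Int, imageHeight: Int,
                   offsetX: Int, offsetY: Int) -> (x: Int, y: Int) {
    // The image center sits at screenCenter + offset, so the screen
    // center is at -offset from the image center.
    (x: imageWidth / 2 - offsetX, y: imageHeight / 2 - offsetY)
}

/// Converts degrees to the tenths of a degree LVGL 8 expects,
/// wrapped into 0..<3600.
func lvglAngle(degrees: Int) -> Int16 {
    let wrapped = ((degrees % 360) + 360) % 360
    return Int16(wrapped * 10)
}

/// Lower bound on the time for one full turn, ignoring rendering time.
func minimumRevolutionMs(stepDegrees: Int, frameDelayMs: Int) -> Int {
    (360 / stepDegrees) * frameDelayMs
}

let pivot = pivotForOrbit(imageWidth: 40, imageHeight: 30, offsetX: 0, offsetY: 100)
print(pivot.x, pivot.y)                                          // 20 -85
print(lvglAngle(degrees: 363))                                   // 30
print(minimumRevolutionMs(stepDegrees: 3, frameDelayMs: 16))     // 1920
```

With these values the ship cannot complete a turn in less than about 1.9 seconds, so a faster orbit would need a bigger step or a shorter delay, assuming the display can keep up.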

Reviving an old project

Nearly a year ago, I posted about Portable AI devices and started exploring open-source offerings in the field. I found an interesting project that back then was called Friend (it’s now called Omi) and started playing around with it. Although I generally liked what they were doing, and even made some small contributions, a few things were bothering me:

- all data was sent to a server in the cloud (they later released the source code for the server side too)

- I was not very familiar with the development stack used

- the way development efforts on the project were organized was a bit too chaotic for me

- I was more interested in learning than in using an existing product, and wanted the freedom to explore in whichever direction I found interesting or intriguing

So I assembled a device as per their instructions and wrote an iOS app that would record and store the audio on the phone. My first use case was to record work meetings; I would figure out later how to make use of the data I collected.

Back then I did not have a 3D printer and quickly created a case with what I had lying around.

The original enclosure used for the device, a recycled clear plastic box with holes in it.

BTW, around the same time, StuffMc pointed me to the Embedded Swift vision document. That’s how I got interested in this technology, and I ended up presenting on it at several conferences and writing a lot about it on this blog.

Although I wanted to explore processing the data on the phone (transcribing and then being able to query using an LLM), I thought exploring that on a server first would be easier and offer more possibilities.

I thus started writing server code that could receive the audio data from the phone. As I already had iOS code in Swift, and this was an exploration project, I decided to write the server code in Swift also (and at some point rewrite the existing firmware in Swift).

Then several things happened at the same time: I took a sabbatical and did not have any meetings to record anymore; I had acquired a 3D printer and taken the device apart so I could model a new case; I hit a bug in Vapor that halted my backend development; and I spent most of my time exploring Embedded Swift. The project came to a halt and the device (or rather its components) slept in a drawer.

In the meantime, the bug in Vapor has been fixed, my sabbatical is over, I’m attending more work meetings again, and I’ve gained a bit of experience modelling and printing 3D objects. As I always wanted to continue the project, now seemed like a good time to do so.

To start recording again, I wanted to put the device back together. So last week, I finished the 3D model and printed it.

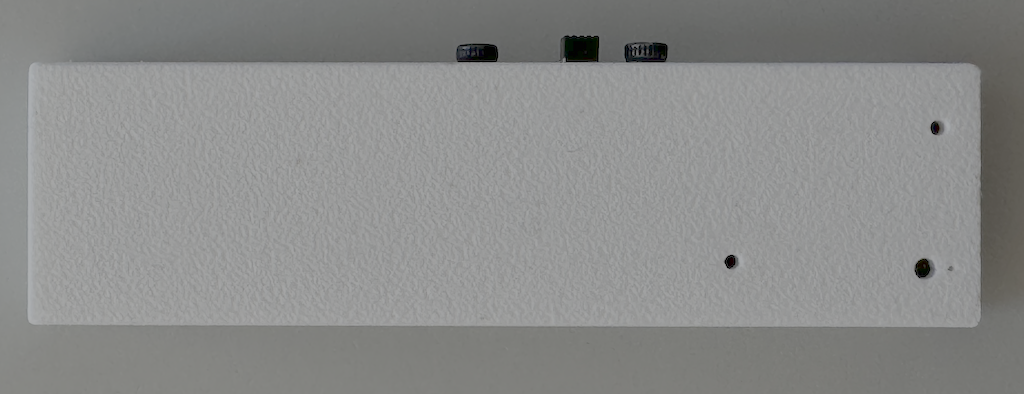

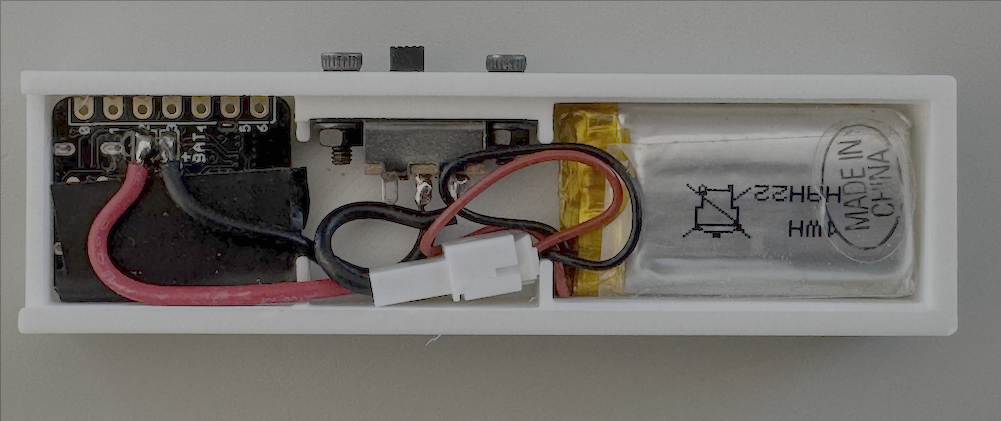

The new enclosure, looks a bit like a pack of gum. Device inside the new enclosure, pretty tightly packed.

I can now look back at the code and continue The PAL Project.

I also want to address the online meetings aspect. For now, I’m using Audio Hijack from Rogue Amoeba to capture the audio. We might talk about open-source alternatives to Rewind.ai some other time.

Oh yes, I know there are lots of questions about these types of devices, their usefulness and certainly their commercial viability (goodbye Humane), but I still want to explore the field, and maybe others want to follow along.

Posting

That’s it for this week! There’s a bit more I wanted to talk about but it’ll have to wait for next week, as otherwise I will just postpone posting this, exactly the opposite of what I’m going for with this series.

Until next time, and I’d love to hear any thoughts or suggestions. You can reach me on Mastodon, my preferred communication channel.